Google Lens Soon to Allow Search with Photos and Text Combined

Google Lens, the company’s image recognition technology, will soon let users combine what a smartphone’s camera sees with typed search terms, so they can ask questions about the objects in view rather than simply have them recognized.

Google will add functionality from its Multitask Unified Model (MUM) so that Lens can understand both visual data and text input, greatly expanding what the technology can do.

MUM debuted at Google’s I/O developer conference earlier this year. According to a report from TechCrunch, MUM was built to allow Google’s technologies to understand information from a wide range of formats simultaneously. Text, images, and videos could all be drawn together and connected with topics, concepts, and ideas. At its announcement, MUM was presented as a broad set of possibilities, but it is now making its way into a more tangible real-world application through its combination with Google Lens.

Google says that it will use MUM to upgrade Google Lens with the ability to add text to visual searches, so that users can provide more context and ask questions about a scene, helping Google return more targeted results.

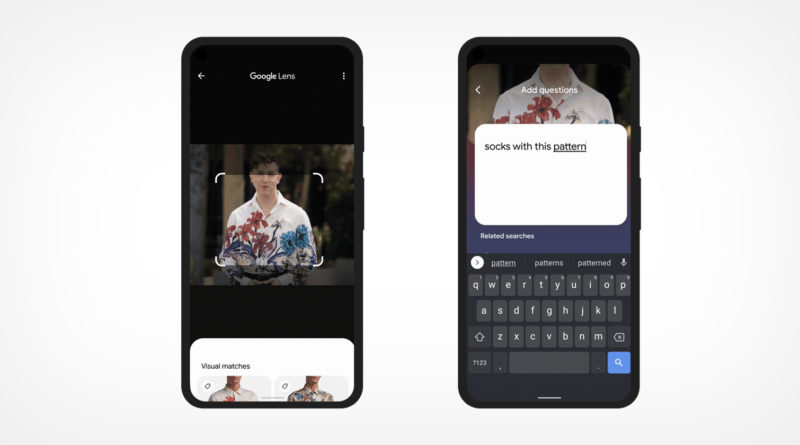

The process could make searching for a pattern or a piece of clothing much easier, as demonstrated in an example provided by the company. A user could pull up a photo of a piece of clothing in Google Search, then tap the Lens icon to ask Google to find the same pattern on a different article of clothing.

As TechCrunch explains, by typing something like “socks with this pattern,” a user could direct Google to more relevant results than a search based on the image or the text alone. In this case, text alone would be nearly impossible to use to get the desired results, and the photo by itself wouldn’t carry enough context either.

Google also provided a second example. If a user is trying to find a part to repair a broken bike but doesn’t know what the broken part is called, Google Lens powered by MUM would allow the user to take a photo of the part and add the query “how to fix” to it, which could connect the user to the exact moment in a repair video that answers the question.

Google believes that this technology would fill a gap in how its services interact with users. Often, part of a query can only be adequately expressed visually, yet an image alone is hard to search precisely without text to narrow the results.

The company said that it hopes to put MUM to work across other Google services in the future as well. The Google Lens MUM update is slated to roll out in the coming months, but no specific timeline was provided because additional testing and evaluation are still needed before public deployment.
